These articles are not designed to offer definitive answers or fixed positions. Instead, they are explorations—reflections grounded in history, data, and evolving thought. Our aim is to surface questions, provide context, and deepen understanding. We believe education thrives not in certainty, but in curiosity.

How do we bet on value?

On a Thursday morning in mid-June 2025, the tech world absorbed a jolt: Meta put $14.3 billion into Scale AI and brought its founder, Alexandr Wang, in-house to lead a new Superintelligence Lab, staffed by researchers reportedly offered pay packages of $100 million or more. Zuckerberg didn’t mince words. Meta, he declared, was ready to invest “hundreds of billions” in the pursuit of superintelligence.

The term, once reserved for science fiction or ivory tower speculation, now sits atop pitch decks, board agendas, and late-night term sheet negotiations. As the race to build machines smarter than humans accelerates, private equity firms, startups, and institutional investors are grappling with a central question: Where is the real value, and how do we not miss it?

This is not just a new lab—it’s the emergence of Superintelligence Centers as a geopolitical, financial, and technological inflection point. What DARPA was to the Cold War and Bell Labs was to telecommunications, these centers may become to the next era of industrial intelligence.

Let’s unpack the opportunity space—and how to position yourself to win in the short, medium, and long term.

📍 Defining the Superintelligence Center

What is a Superintelligence Center?

Functionally, it’s an institutional-grade research and computation hub dedicated to the safe and scalable development of machine intelligence that surpasses human cognition. Philosophically, it’s a declaration of war on latency—on the idea that humans must be in the loop.

Superintelligence, unlike AGI, is not just about mimicking humans. It's about surpassing them, creating entities capable of reasoning across trillion-parameter contexts, seeing across modalities, optimizing across markets, and learning at speeds evolution never anticipated.

Meta’s new lab—helmed by Wang, not LeCun—isn't just building models. It's building capacity: compute, data pipelines, simulation environments, brain-scale algorithms, and a workforce of elite researchers with no commercial pressure to ship product—yet.

Compare it to Safe Superintelligence Inc., the startup led by Ilya Sutskever, which promises to build and release a safe version of superintelligence only when ready. We are witnessing a divergence: some labs chase scale and speed; others pursue caution and control.

Both are needed. But here’s what matters to investors and executives: these labs are going to redraw the boundaries of what a company can be.

📍 What Superintelligence Really Means, and Why We Haven’t Met It Yet

In the great theater of artificial intelligence, “superintelligence” plays the part of both prophet and ghost. We invoke it in hushed tones at venture dinners, paint it in glowing terms on funding decks, and whisper of it in boardrooms like a secular second coming. But what is superintelligence, really? And where—on the crowded stage of today's AI actors—does it actually stand?

A group of researchers at Google DeepMind attempted to clarify this in their “Levels of AGI” framework with something Silicon Valley sorely needed: a grid.

Imagine a matrix—simple on its surface, but philosophically loaded—where the vertical axis charts performance (from “emerging” to “superhuman”), and the horizontal axis charts generality (from narrow, task-specific AI to broad, human-like adaptability).

From the bottom left, where calculator software and grammar checkers hum with modest precision, to the top right corner—still blank—where Artificial Superintelligence (ASI) is supposed to live, the grid exposes our collective ambition: to move machines not just upward (faster, better, stronger), but outward—toward something more human, more flexible, more aware.

The chart anchors superintelligence in Level 5 performance—that is, systems that outperform 100% of humans—combined with generality: the ability to operate across domains, understand metacognitive tasks, and learn in ways that aren't hand-fed. It’s not enough to beat us at Go or fold proteins with atomic accuracy; to be superintelligent, a system must understand poetry and planning, logic and lies, chess and child-rearing—all in the same mind.

In this framing, ChatGPT, Gemini, and other frontier models fall squarely in “Emerging AGI”: general, yes, but performing only at or somewhat above the level of an unskilled human. Narrow systems climb much higher. The framework rates Deep Blue as “Virtuoso” and AlphaFold as “Superhuman,” but both are savants locked in single domains. No current system, the paper makes clear, inhabits the upper right corner where ASI is said to live.
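For readers who think in data structures, the grid can be sketched as two small enumerations and a membership test. This is only an illustration of the framing described above, not the researchers’ own tooling: the intermediate level names (Competent, Expert, Virtuoso) are not given in this article and are filled in here as an assumption, and the names `System`, `cell`, and `is_asi` are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class Performance(IntEnum):
    # Vertical axis of the grid. Only EMERGING and SUPERHUMAN are named
    # in the article; the three middle names are an assumption.
    EMERGING = 1
    COMPETENT = 2
    EXPERT = 3
    VIRTUOSO = 4
    SUPERHUMAN = 5  # "Level 5": outperforms 100% of humans


class Generality(IntEnum):
    # Horizontal axis of the grid.
    NARROW = 0   # task-specific
    GENERAL = 1  # broad, human-like adaptability


@dataclass(frozen=True)
class System:
    name: str
    performance: Performance
    generality: Generality


def cell(system: System) -> str:
    """Return a human-readable label for the grid cell a system occupies."""
    return f"{system.performance.name.title()} / {system.generality.name.title()}"


def is_asi(system: System) -> bool:
    """ASI is the top-right cell: superhuman performance AND general scope."""
    return (
        system.performance is Performance.SUPERHUMAN
        and system.generality is Generality.GENERAL
    )


# Placements taken from the article's description of the chart.
SYSTEMS = [
    System("ChatGPT", Performance.EMERGING, Generality.GENERAL),
    System("AlphaFold", Performance.SUPERHUMAN, Generality.NARROW),
]

if __name__ == "__main__":
    for s in SYSTEMS:
        print(f"{s.name}: {cell(s)} (ASI: {is_asi(s)})")
```

The point of the sketch is that ASI is a conjunction: a system has to max out both axes at once, which is why a superhuman specialist and a general-purpose chatbot both fail the test for different reasons.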

And perhaps most provocatively, the authors argue that there is no smooth road from here to there. Intelligence may not scale like compute. In fact, recent research (e.g., from Apple and Arizona State) suggests performance may degrade as models attempt to solve complex, multi-step problems. Intelligence, it turns out, isn’t just about parameter count—it’s about architectural leaps, memory integration, and value alignment.

So while Meta, OpenAI, and Anthropic race to hire, train, and brand their way to superintelligence, the truth is more sobering: we don’t yet know how to measure it, much less manufacture it.

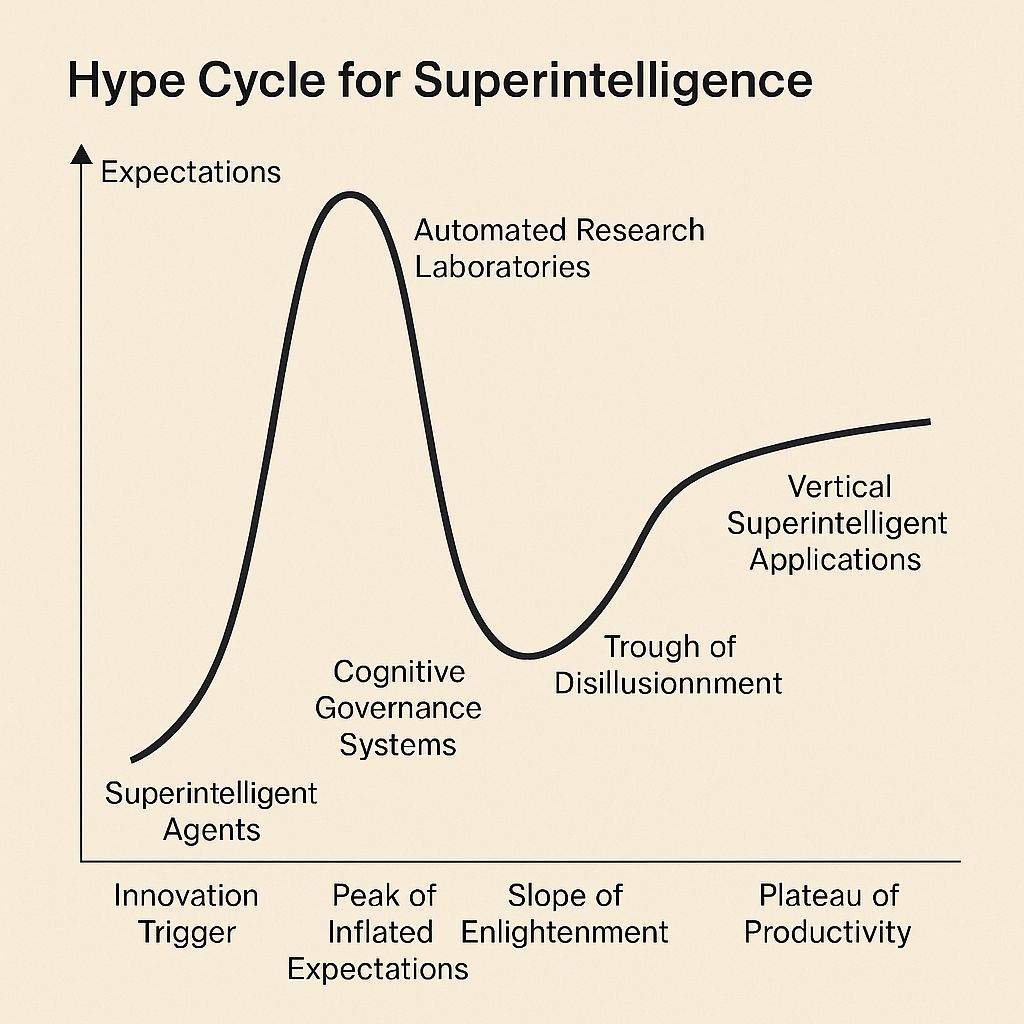

Still, the game is afoot. And as the hype cycle crests, the real players—the patient investors, the deep technologists, the cautious optimists—are staring at that blank quadrant in the top-right corner of the chart and asking the only question that matters:

What happens when the box is no longer blank?

🛣️ Opportunity Map: How to Position Across Time Horizons

🔹 Short Term (0–12 months): The Picks and Shovels Play

“In a race to the moon,

invest in the rocket fuel,

not the flag.”

Opportunities:

Foundational Infrastructure: Invest in companies building cooling systems, power management, AI-native datacenter design, and energy sourcing (nuclear, hydrogen, geothermal).

Talent Aggregators: Back elite, nimble technical hiring platforms that place postdocs and AI researchers into superintelligence labs.

ModelOps & Evaluation Tools: These labs need tools to measure, validate, and interpret the behavior of increasingly opaque models. Think automated red teaming, eval-as-a-service, and synthetic task generation.

How to Play:

Back companies that are agnostic to which lab wins but essential to all labs. The core risk here is speed of commoditization—so invest in those with defensible IP or regulatory moats (e.g., audit frameworks).

🔸 Medium Term (1–3 years): The Governance & Applied Frontier

“A superintelligence center without a use-case

is like a jet engine without a cockpit.”

Opportunities:

Safety + Interpretability Startups: The more powerful these models become, the more urgent governance and safety frameworks will be. Startups that can define benchmarks, build simulators, or score alignment will be snapped up.

Vertical Intelligence Primitives: Superintelligence Centers will need “proving grounds” in sectors like finance, defense, bio, and law. Startups that offer high-integrity data, simulation environments, or digital twins in these verticals become crucial partners.

How to Play:

Partner with mid-market enterprises to co-develop vertical fine-tuning centers. Imagine co-owning the GPT-equivalent for SEC filings, clinical trials, or aerospace design. The real value is in contextualized intelligence.

🔶 Long Term (3–10 years): The Platform Shift

“Superintelligence won’t just build products.

It will rewrite capitalism.”

Opportunities:

Cognitive Operating Systems: Imagine platforms that allocate compute, context, and cognitive bandwidth dynamically, deciding which agent tackles which subproblem in a corporate workflow.

Equity in Cognitive Entities: Just as companies used to own mines or machinery, in the future they may own a self-improving expert agent—a form of corporate IP that grows, reasons, and advises.

How to Play:

Private equity should begin acquiring assets that have slow-turn, high-value proprietary data—legal discovery, medical imaging, legacy software systems. These become flywheels for training in a post-model world.

🧬 A Word of Caution: The Alignment Fog

Despite the hype, there is a growing consensus among AI veterans that we’re not there yet. As The New York Times noted, researchers at Arizona State and Apple have warned that model performance may degrade as reasoning complexity increases. Reinforcement learning, the current path, is noisy and unpredictable. Subbarao Kambhampati—a veteran AI researcher—sums it up bluntly: “You always need a human in the loop.”

This epistemic humility should shape investment theses. Many “superintelligence” claims are branding exercises. Zuckerberg knows it, Sutskever knows it, and investors should too. The real edge lies in calibrated conviction: investing not just in raw scale but in architectures of alignment, trust, and explainability.

🏁 Cautionary Note: This Is Not the Internet

The development of superintelligence won’t echo the trajectory of cloud or mobile. This isn’t just a platform shift. It’s a civilizational shift. The 1990s were a remarkable decade for computing: the hype came first, and the value followed. There is no guarantee the same sequence will repeat here.

We’re not just upgrading productivity. We’re rebuilding cognition—from first principles, at planetary scale.

Startups that survive this wave will do so not because they’re smarter, but because they chose the right questions to solve. Private equity firms that thrive will be those who look past the hype and see the gears behind the glamour—the infrastructure, governance, and ethics shaping the minds of the machines to come.

The Superintelligence Centers of 2025 are not just labs. They are the new central banks of cognition.

Invest accordingly.

📚 References & Further Reading

Reuters: Zuckerberg’s Superintelligence Bet

Zuckerberg says Meta will invest hundreds of billions in superintelligence

→ A critical signal of Meta’s capital allocation strategy and competitive paranoia.

NYT: Inside Meta’s $14.3B Superintelligence Lab

Meta Unveils Its Superintelligence Lab Led by Alexandr Wang

→ Provides context for Meta’s internal challenges and ambitions in the AI arms race.

OpenAI’s Technical Reports

OpenAI Research Index

→ Continuously updated and provides a clear sense of benchmark trends, limitations, and frontier techniques.